PostgreSQL Performance Meltdown?

Overview

Test setup

Test details

- Old kernel version: 3.10.0-514.el7.x86_64

- New kernel version, as-is: 3.10.0-693.11.6.el7.x86_64-pti-pcid

- New kernel, with nopcid: 3.10.0-693.11.6.el7.x86_64-pti-nopcid

- New kernel, with nopti: 3.10.0-693.11.6.el7.x86_64-nopti-pcid

- New kernel, with nopti nopcid: 3.10.0-693.11.6.el7.x86_64-nopti-nopcid

Results

| Alias | TPS (1GB) | TPS (8GB) | TPS (28GB) |
|---|---|---|---|
| old | 92995 | 80554 | 107144 |
| pti pcid | 88394 (-4.9%) | 77586 (-3.7%) | 102839 (-4.0%) |
| pti nopcid | 83216 (-10.5%) | 74947 (-7.0%) | 98653 (-7.9%) |
| nopti pcid | 90772 (-2.4%) | 79120 (-1.8%) | 105726 (-1.3%) |
| nopti nopcid | 91387 (-1.7%) | 78856 (-2.1%) | 105593 (-1.4%) |
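The percentages in parentheses are each configuration's TPS relative to the old kernel at the same data size. As a minimal sketch of that arithmetic (the function and variable names here are illustrative, not part of the original test scripts), the figures can be recomputed from the raw TPS values:

```python
# TPS figures copied from the results table (columns: 1GB, 8GB, 28GB).
RESULTS = {
    "old": (92995, 80554, 107144),
    "pti pcid": (88394, 77586, 102839),
    "pti nopcid": (83216, 74947, 98653),
    "nopti pcid": (90772, 79120, 105726),
    "nopti nopcid": (91387, 78856, 105593),
}


def relative_change(tps, baseline):
    """Percentage change of tps relative to baseline, rounded to one decimal."""
    return round((tps - baseline) / baseline * 100, 1)


def changes_vs_old(results=RESULTS):
    """Map each non-baseline alias to its per-column change against 'old'."""
    baseline = results["old"]
    return {
        alias: tuple(relative_change(t, b) for t, b in zip(row, baseline))
        for alias, row in results.items()
        if alias != "old"
    }


if __name__ == "__main__":
    for alias, deltas in changes_vs_old().items():
        print(alias, deltas)
```

Keeping the baseline as an ordinary row in the same mapping means a different reference kernel could be chosen by changing a single key.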

There is no general guidance on whether to disable PTI; after all, its purpose is to close a hardware-based vulnerability. When the server is a physical machine (not a VM or container) dedicated to the database alone, it seems acceptable to boot with the nopti parameter and get better performance.
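Before comparing numbers, it helps to confirm which of these boot parameters the running kernel was actually started with. The following is a minimal sketch, assuming a Linux host where the boot command line is readable at `/proc/cmdline`; the helper names `boot_flags` and `read_cmdline` are hypothetical, and the check only looks for the literal `nopti` and `nopcid` tokens used in the tests above.

```python
def boot_flags(cmdline):
    """Report whether 'nopti' and 'nopcid' appear as standalone boot parameters."""
    params = cmdline.split()
    return {"nopti": "nopti" in params, "nopcid": "nopcid" in params}


def read_cmdline(path="/proc/cmdline"):
    """Read the kernel command line of the running system (Linux only)."""
    with open(path) as f:
        return f.read()


if __name__ == "__main__":
    print(boot_flags(read_cmdline()))
```

Splitting on whitespace rather than doing a substring search avoids a false match when one of the names happens to appear inside a longer parameter.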

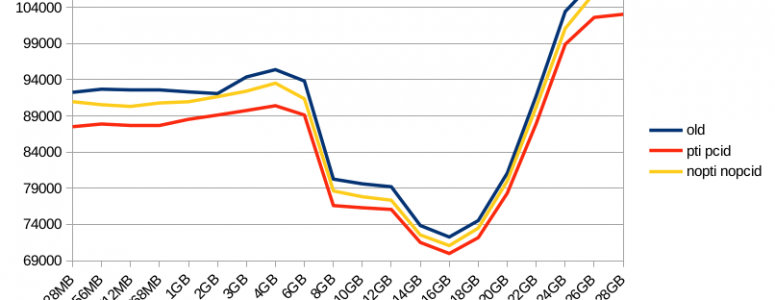

Here’s a graph, that shows TPS for the old kernel, new (PTI-enabled) one and new kernel with PTI and PCID features disabled: